GAM170 Reflection

I came into this course wanting to become an independent app developer and to start a small studio making educational applications specialising in virtual reality (VR) and augmented reality (AR). My interest has always tended toward making platform-agnostic applications, as I already have a basic understanding of web technologies such as HTML, CSS, and JavaScript. However, I wanted to learn to develop for the newer VR and AR technology coming to market because it can create experiences that are not possible in the physical world. During the first weeks of my app synergies journey, it has become clear that I need to focus on a few key skills. The C# programming language, priority management, interaction in VR, and marketing are of particular importance to my growth as an app developer.

I chose C# because it is one of the more popular programming languages and was designed to be simple and easy to use. C# is also used in game development and is the primary scripting language in Unity. Unity is now one of the most popular game engines, and it has also broadened its development platform beyond games into industries such as architecture, film, and automotive, as well as VR and AR.

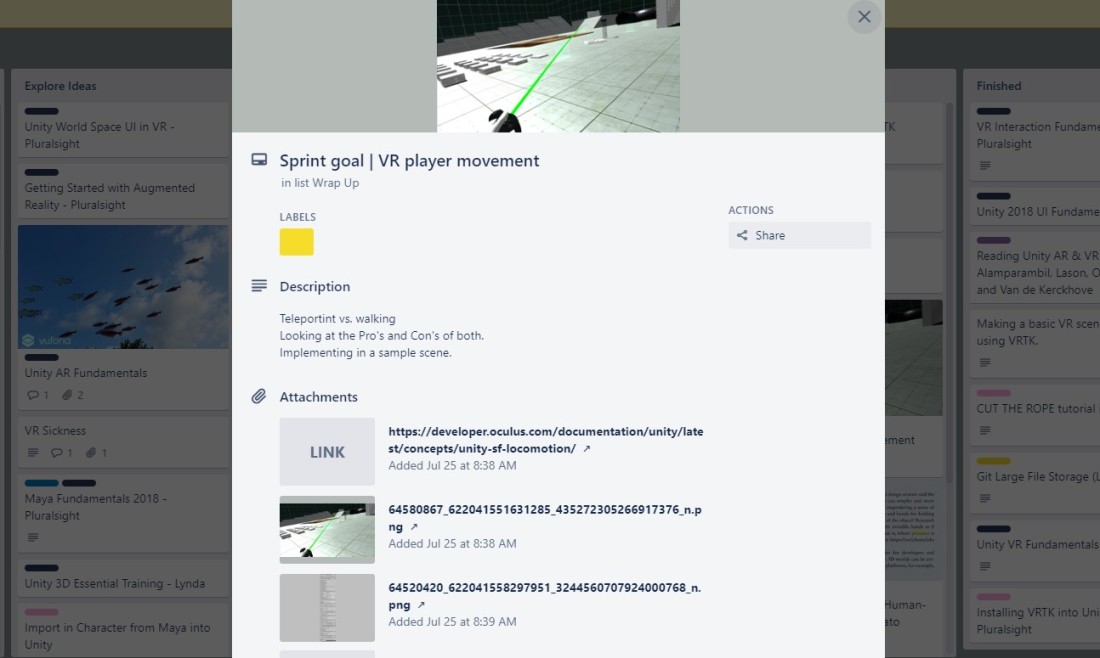

C# is also similar to other C-family languages such as C, C++, and Java. Becoming technically proficient in C# makes these languages easier to learn and opens the door to future opportunities. My goal is to improve my C# skills through the Pluralsight courses by planning a sprint for each chapter. Each sprint is eight hours spread over two weeks, which leaves me time to work on the weekly course modules. I have added these sprints to my Trello board to stay organised. The goal is to start intermediate-level C# courses by mid-September.

From the beginning of the programme, we had been encouraged to explore and gather knowledge. By week three, I had already started using Unity but was still interested in the Unreal engine. I was also curious about how 3D characters work, so I was trying out character rigging in the 3D program Maya. I was beginning to spread myself thin and wasn't happy with my progress. I was starting to feel a bit overwhelmed, thinking there was so much I didn't know.

After a weekly support session, I took my advisor's advice and began to focus on Unity because of its tight integration with C#. My priority going forward is to hone my Unity skills by setting short sprints for the chapters of the Pluralsight Unity courses. By mid-September, my Unity skills should be firmly at the intermediate level. The goal is to build a working prototype of an interactive virtual reality character in Unity for the Oculus Rift headset.

Another skill I focused on was interaction with a virtual environment. I experimented with touch interaction in Unity, using a virtual hand to pick up objects. Setting this up was difficult at first because I didn't understand how to implement touch correctly using the Oculus SDK and the SteamVR SDK, which come from the two dominant companies in the VR market. After some research, I found a quick way to implement touch using the Virtual Reality Toolkit (VRTK). Using this toolkit with C# scripting lets me set up an interactive environment quickly. I can then spend the time saved on deepening the interaction, for example by writing scripts that handle haptic feedback.

My next goal is to create a Unity scene that can communicate with the IBM Watson server. I have added this goal to my Trello board and set up a free IBM Cloud account to access Watson. I plan to spend 10 hours on the initial setup during the session break. The success of the goal hinges on communicating with the speech-to-text server and getting it to recognise verbal input through Unity.

Marketing, I believe, is important from a financial standpoint as well as a professional one. Understanding who my audience is and how to reach users will require qualitative methods, quantitative methods, or a mixture of both. Creating personas from the collected data will also help me better understand the audience and their motives. I think it's essential to create several personas, because not everyone will have the same motives for using an app. I will focus on who each person is and how the app will fit into their life. I also need to think about how they will use or access it, and what they expect to get out of the experience. Keeping the personas relevant and checking each iteration of the app against them should help me develop an app that my target audience will want to use. My goal is to create five user personas for a VR language-learning app I want to develop. I have budgeted 10 hours and will post this goal on my Trello board. I will focus on four areas: the person, behaviours and wants, use, and expectations. After creating the personas, I will gather feedback from friends and course mates and incorporate it into a finished persona deck.

Looking back on my personal and professional development, I think I have made a lot of progress from a personal perspective. I can measure that progress by looking at what I have accomplished so far. I have made progress with C# and can build a basic VR app and a basic AR app in Unity for both Android and iOS. From a professional point of view, I have a much better understanding of the direction I want to take, and I am learning how to judge what is essential for my career path. I have been using Trello to track my progress but have let my blog slip. The blog is essential to my development, and putting more effort into self-reflective practice will help me grow. I think the personal case study was beneficial. Sitting down to examine five critical skill challenges and reflect on them will help carry me through the more challenging parts of the programme to come.
